Using the Nvidia hardware and software ecosystem to achieve object detection and data streaming dash

- service50558

- November 21, 2023

- 5 min read

Updated: November 22, 2023

Implementing a Green Lizard Detection and Prevention Dashboard

1. Project Overview

This page introduces the open-source project "Green Lizard Detection and Monitoring" from the Nvidia Jetson community. The project runs computer vision-based object detection models on a Jetson Nano to track and display the movements of green lizards in real time.

We also provide the GitHub repository for this open-source project, so readers can reproduce it and use it as a starting point for similar projects.

2. Project Motivation

What is the Green Lizard?

The Green Lizard, also known as the American Iguana, is a large lizard that lives in trees. It can reach a total length of 1 to 2 meters from head to tail and has a lifespan of over 10 years. The lizard primarily feeds on plant leaves, shoots, flowers, and fruits. It is a diurnal reptile and can lay 24–45 eggs per clutch. Known for its strong reproductive ability and adaptability to different environments, the green lizard poses ecological challenges.

Significance of the Green Lizard Ecological Issue

In Taiwan, abandoned green lizards reproduce rapidly in the wild. The Forestry Bureau of the Council of Agriculture in Taiwan has expressed concerns over their ecological impact. The lizards not only harm the ecosystem but also cause agricultural losses by consuming crops. Their habit of digging holes, especially near riverbanks and fish ponds, also damages infrastructure. According to the government's current monitoring efforts, green lizards have spread to multiple counties and cities.

Establishment of a Green Lizard Monitoring System

With the recent advancements in edge devices, we have the opportunity to develop and deploy real-time computer vision applications for detecting and monitoring the movements of green lizards. Through the implementation of a system with real-time alerts and notifications, we can take timely measures to prevent asset losses caused by green lizards.

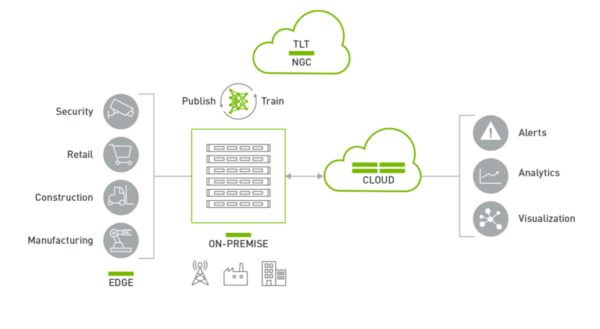

Software Architecture

3. Data Collection

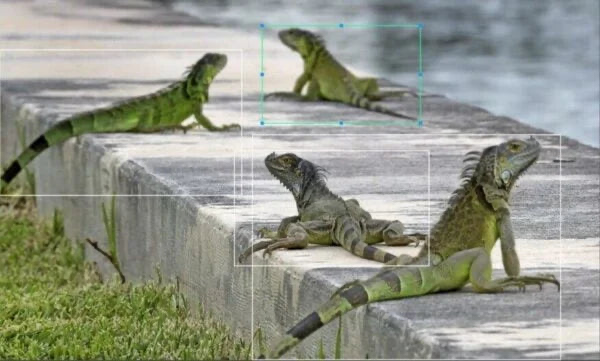

Green Lizard Image Labeling Illustration

Image Crawler

Using a Selenium-based crawler for dynamically loaded web pages, we collected around 5000 images of green lizards and labeled each one individually. We also provide downloads for the image and label data; please refer to our image labeling tutorial.
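As a rough illustration of this kind of crawler, the sketch below scrolls a dynamically loaded page with Selenium and gathers the image URLs it finds. It is not the project's actual crawler: the function names, the scroll count, and the pause length are assumptions made for this example, and the page to crawl is left to the caller.

```python
from urllib.parse import urlparse


def clean_image_urls(urls):
    """Keep unique http(s) image URLs, preserving first-seen order."""
    seen, kept = set(), []
    for url in urls:
        if not url:
            continue  # <img> elements without a src attribute
        if urlparse(url).scheme not in ("http", "https"):
            continue  # skips inline data: URIs and relative paths
        if url not in seen:
            seen.add(url)
            kept.append(url)
    return kept


def collect_image_urls(page_url, scrolls=5, pause=1.0):
    """Scroll a dynamically loaded page and return its image URLs.

    Hypothetical helper: scrolls and pause are illustrative defaults.
    """
    # Imported lazily so clean_image_urls works without Selenium installed.
    import time
    from selenium import webdriver
    from selenium.webdriver.common.by import By

    driver = webdriver.Chrome()
    try:
        driver.get(page_url)
        for _ in range(scrolls):
            # Scrolling to the bottom triggers lazy loading of more results.
            driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
            time.sleep(pause)
        images = driver.find_elements(By.TAG_NAME, "img")
        return clean_image_urls(img.get_attribute("src") for img in images)
    finally:
        driver.quit()
```

The filtering step is kept separate from the browser code so that duplicates and inline thumbnails can be dropped before any download starts.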

Image Labeling

For collaborative labeling, we used the web-based object detection labeling tool makesense.ai because it is simple to use and runs in the browser, so no software installation is needed.

You can find the tutorial documentation for using this tool at this link.
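Labels exported from a labeling tool often need to be converted before training. The sketch below assumes the labels were exported in YOLO text format (normalized center, width, and height) and that the training toolkit expects KITTI-style label lines with pixel coordinates. Both formats are assumptions for this example, and the class name "iguana" is hypothetical.

```python
def yolo_to_kitti(line, img_w, img_h, class_names):
    """Convert one YOLO label line into one KITTI label line.

    YOLO:  "<class_id> <x_center> <y_center> <width> <height>" (normalized 0..1)
    KITTI: 15 space-separated fields; only the class name and the pixel
           bounding box are filled in here, the rest are set to zero.
    """
    class_id, xc, yc, w, h = line.split()
    xc, yc, w, h = float(xc), float(yc), float(w), float(h)

    # Convert the normalized center/size box to pixel corners, clamped to the image.
    xmin = max(0.0, (xc - w / 2) * img_w)
    ymin = max(0.0, (yc - h / 2) * img_h)
    xmax = min(float(img_w), (xc + w / 2) * img_w)
    ymax = min(float(img_h), (yc + h / 2) * img_h)

    name = class_names[int(class_id)]
    return (
        f"{name} 0.00 0 0.00 "
        f"{xmin:.2f} {ymin:.2f} {xmax:.2f} {ymax:.2f} "
        "0.00 0.00 0.00 0.00 0.00 0.00 0.00"
    )


# Example: a box centered in a 640x480 image, covering 25% of the width and 50% of the height.
print(yolo_to_kitti("0 0.5 0.5 0.25 0.5", 640, 480, ["iguana"]))
```

If the labels were exported in a different format, only the parsing on the first two lines of the function would need to change.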

4. Training the Green Iguana Detection Model using Nvidia TLT

Nvidia Transfer Learning Toolkit

Introduction to Nvidia Transfer Learning Toolkit (TLT)

Creating an AI/ML model from scratch to solve business problems is costly. Transfer learning is therefore often used to speed up project development. Transfer learning is a subfield of machine learning that reuses existing models, such as pre-trained models, for different but related problems.

NVIDIA's Transfer Learning Toolkit (TLT) is a toolkit built for this purpose. It provides popular pre-trained models for image and natural language processing tasks. TLT is designed primarily with deployment in mind and integrates features for reducing model size and optimizing models after training.

Ideally, training a model with TLT only requires setting the training parameters, with no need to write code from scratch. TLT provides an end-to-end workflow for training, optimization, and deployment. This differs significantly from typical development workflows and greatly reduces project development time and technical costs.

Hardware Requirements

The recommended hardware configuration to run Nvidia Transfer Learning Toolkit (TLT) is as follows:

- 32 GB system RAM

- 32 GB of GPU RAM

- 8-core CPU

- 1 NVIDIA GPU

- 100 GB of SSD space

Software Requirements

NVIDIA TLT Installation Guide

We provide a detailed installation guide for NVIDIA TLT. Please refer to our prepared environment setup tutorial for step-by-step instructions.

TLT Model Training and Optimization

The object detection model used in this project is YOLOv4. You can refer to the YOLOv4 training example code with slight modifications to train the model using the images and labels of green iguanas.

When the training code finishes running, it automatically exports a .etlt file, which is the final model file used for deployment.

Note: Sample code for quick hands-on experience with TLT's computer vision features can be found in NVIDIA's official TLT samples. Most of the examples need only minor parameter adjustments to run training, optimization, and export.

5. Deploying the Model on Jetson Devices

Introduction to DeepStream

After completing model training, the next step is to deploy it on Jetson devices for real-time inference, while also streaming images and inference results. We use Nvidia DeepStream, a framework specifically designed for inference and data streaming.

DeepStream is an end-to-end framework for streaming applications that handles deep learning inference, image and sensor processing, and the transmission of insights to the cloud. You can build cloud-native DeepStream applications using containers and deploy them at scale with Kubernetes orchestration. When deployed at the edge, applications can communicate between IoT devices and cloud-standard message brokers (such as Kafka and MQTT) for large, wide-area deployments.

Introduction to TensorRT

NVIDIA TensorRT™ is an SDK for deep learning inference. It includes an inference optimizer and a runtime that enable deep learning applications to achieve low latency and high throughput. Before a model is deployed, TensorRT converts it into an optimized engine, which speeds up inference at runtime.

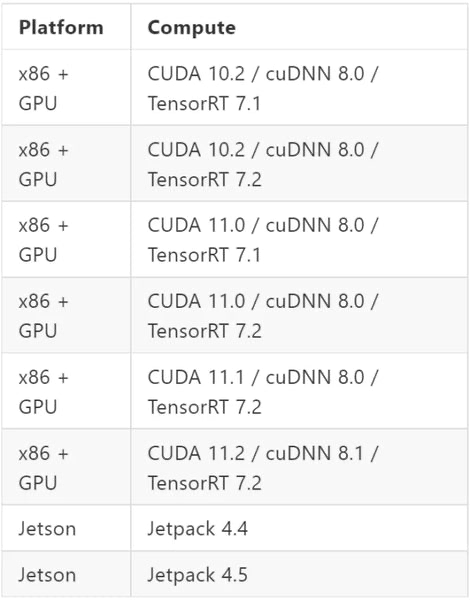

TLT is a deployment-oriented training framework, so it includes tooling for converting trained models. The tlt-converter tool builds a TensorRT inference engine from the .etlt model exported by TLT. The engine must be built with the tlt-converter version that matches the hardware and software of the target device; NVIDIA provides a separate tlt-converter build for each supported configuration.

Installing DeepStream Environment

We have documented the steps for installing the DeepStream environment, as well as the process of building the TensorRT engine for the .etlt model. This includes methods for installing other packages needed for this project, such as the MQTT client. For detailed installation steps, please refer to our prepared environment setup tutorial.

Running DeepStream

deepstream_app/deepstream_mqtt_rtsp_out.py is the script that runs the pipeline through the DeepStream Python bindings. This program performs object detection inference, streams the video over RTSP, and sends the resulting inference data to the MQTT broker, from which it is stored in the database for later visualization.
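To make the inference data sent to the broker more concrete, here is a minimal sketch of how per-frame detections could be packed into a JSON message. The field names (camera_id, frame, timestamp, count, objects) are a hypothetical schema chosen for this example, not the format used by the project's script.

```python
import json
from datetime import datetime, timezone


def build_payload(camera_id, frame_number, detections, timestamp=None):
    """Serialize one frame's detections as a JSON string.

    detections: list of (label, confidence, left, top, width, height) tuples.
    The schema is hypothetical and only illustrates the idea.
    """
    ts = timestamp or datetime.now(timezone.utc)
    return json.dumps({
        "camera_id": camera_id,
        "frame": frame_number,
        "timestamp": ts.isoformat(),
        "count": len(detections),
        "objects": [
            {
                "label": label,
                "confidence": round(conf, 3),
                "bbox": [left, top, width, height],
            }
            for label, conf, left, top, width, height in detections
        ],
    })


# Example with one detection on frame 42.
message = build_payload("cam0", 42, [("iguana", 0.91234, 100, 80, 200, 150)])
print(message)
```

An MQTT client would then publish this string to a topic of your choice; keeping the message small (counts and boxes rather than images) suits a device like the Jetson Nano.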

6. Data Dashboard

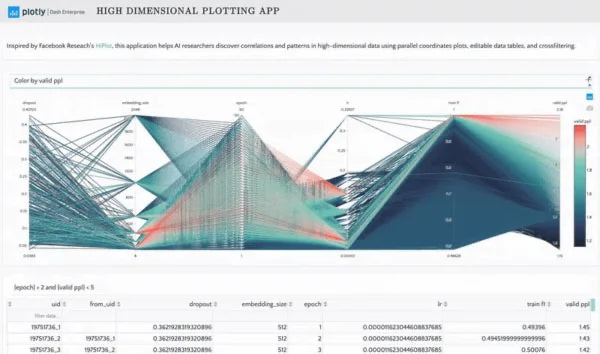

Plotly Dash

Plotly Dash is a framework for interactive data visualization. It lets you write a data visualization front-end page in a language such as Python instead of front-end languages. Extension packages built on Dash simplify web styling and layout, making it well suited to quickly developing web-based data visualizations.

In addition, the live-update feature of Dash lets the web page refresh its content continuously, which makes it especially suitable for the dashboard requirements of this project.
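The live-update pattern can be sketched as follows: a dcc.Interval component fires on a timer, and a callback rebuilds the figure from the latest rows. This is a minimal sketch, not the project's dashboard. The component ids, the per-minute aggregation, and the fetch_rows function (which would query the database) are assumptions made for this example.

```python
from collections import Counter


def detections_per_minute(rows):
    """Sum detection counts per minute.

    rows: iterable of (iso_timestamp, count) pairs, e.g. ("2023-11-21T10:00:05", 2).
    Returns a sorted list of (minute, total) pairs.
    """
    totals = Counter()
    for ts, count in rows:
        totals[ts[:16]] += count  # "YYYY-MM-DDTHH:MM"
    return sorted(totals.items())


def make_figure(rows):
    """Build a Plotly figure (as a plain dict) from the latest rows."""
    points = detections_per_minute(rows)
    return {
        "data": [{"type": "bar",
                  "x": [minute for minute, _ in points],
                  "y": [total for _, total in points]}],
        "layout": {"title": {"text": "Detections per minute"}},
    }


def build_app(fetch_rows, refresh_ms=5000):
    """Create a Dash app that redraws the chart every refresh_ms milliseconds.

    fetch_rows is a hypothetical callable returning (iso_timestamp, count) rows.
    """
    # Imported lazily so the helpers above work without Dash installed.
    from dash import Dash, dcc, html, Input, Output

    app = Dash(__name__)
    app.layout = html.Div([
        dcc.Graph(id="detections"),
        dcc.Interval(id="tick", interval=refresh_ms, n_intervals=0),
    ])

    @app.callback(Output("detections", "figure"), Input("tick", "n_intervals"))
    def refresh(_n):
        # Runs each time the interval fires; re-reads the data and redraws.
        return make_figure(fetch_rows())

    return app
```

Separating the aggregation from the Dash wiring keeps the data logic easy to check on its own, independent of the web server.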

Dash Bootstrap Components

Bootstrap is an open-source front-end framework for website and web application development. It provides HTML, CSS, and JavaScript building blocks, including typography, forms, buttons, navigation, and other components, as well as JavaScript extensions. Its goal is to make the development of dynamic web pages and web applications easier.

Dash Bootstrap Components makes Bootstrap's components and layout system available in Dash, so page layouts can be configured quickly and easily.

MQTT broker

Eclipse Mosquitto

Users can choose an MQTT broker based on their preferences. In this project, we use Eclipse Mosquitto. Once installed, simply start the MQTT broker to begin receiving data.

Database Setup

MySQL

This project uses MySQL for the database, but users can choose their preferred database. The database stores the inference data that the Jetson Nano publishes to the MQTT broker. Plotly Dash periodically queries the database and presents the latest data on the dashboard.

MQTT Topic Subscription and Data Writing to Database

While DeepStream is running on the Jetson Nano, it continuously sends inference results to the MQTT broker over the MQTT protocol. A subscriber to the corresponding topic is notified whenever new data arrives on that topic and writes it to the database. The script for this process is located at mqtt_topic_subscribe/mqtt_msg_to_db.py.
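The subscribe-and-store step can be sketched as a function that parses one incoming message and inserts a row. To keep the example self-contained, it uses Python's built-in sqlite3 as a stand-in for MySQL, and the table layout and message fields (camera_id, timestamp, count) are hypothetical. It is not the content of mqtt_msg_to_db.py.

```python
import json
import sqlite3

# Hypothetical table layout; sqlite3 stands in for MySQL in this sketch.
SCHEMA = """
CREATE TABLE IF NOT EXISTS detections (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    camera_id TEXT NOT NULL,
    ts TEXT NOT NULL,
    count INTEGER NOT NULL
)
"""


def open_db(path=":memory:"):
    """Open the database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def store_message(conn, payload):
    """Parse one MQTT payload (bytes or str) and insert it as a row.

    Returns True if the row was stored, False if the payload was malformed.
    """
    try:
        msg = json.loads(payload)
        row = (str(msg["camera_id"]), str(msg["timestamp"]), int(msg["count"]))
    except (ValueError, KeyError, TypeError):
        return False  # ignore messages that are not valid detection records
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO detections (camera_id, ts, count) VALUES (?, ?, ?)", row
        )
    return True


# Example: one valid message and one malformed message.
conn = open_db()
print(store_message(conn, b'{"camera_id": "cam0", "timestamp": "2023-11-21T10:00:05", "count": 2}'))
print(store_message(conn, "not json"))
```

In a real subscriber, an MQTT client library's message callback would pass each received payload to store_message; rejecting malformed messages keeps one bad publisher from stopping the writer.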

Run the Web Dashboard

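Before starting the web dashboard, the following must be in place:

- DeepStream is deployed and running on the Jetson device

- The MQTT broker is running on the data server

- The database is running on the data server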

Once the above conditions are met, the web dashboard can be started. Note that the DeepStream configuration is adjustable, so users who later want to swap in another object detection model only need to modify the configuration files and the DeepStream Python script.